As part of my Master's degree in Computer Science at the University of Bordeaux, I developed and documented an autonomous vision system for a football robot in the RoboCup Small Size League (SSL), in response to the SSL Vision Blackout challenge. The objective is to build a robot capable of playing football without the help of external cameras, relying solely on its own sensors and computer vision algorithms. The technologies and methods used are detailed below.

Results:

Live demonstration of the computer vision

This project is part of the RoboCup competition, specifically the Small Size League (SSL). The goal is to develop a football robot capable of playing autonomously, without a global view of the field. Unlike traditional systems that use cameras overlooking the field, our robot is equipped with an onboard camera that provides only a limited view of its immediate environment.

Relying on specific hardware (field, robots, camera, Nvidia Jetson Nano board), we defined several key steps to successfully complete this project:

- Ball detection and interaction: The robot must locate the ball in its field of vision and perform simple actions like pushing or shooting it.
- Goal scoring: The robot must identify the opponent's goal and execute precise shots, taking into account whether a goalkeeper is present.
- Autonomous navigation: The robot must be able to move to specific positions on the field using only its onboard camera.

During this project, we faced several challenges:

- Hardware limitations: The limited computing power of the Jetson Nano board and the image quality of the camera required algorithmic optimizations.
- Complexity of computer vision: Detecting moving objects in a dynamic environment is difficult, especially because of lighting variations and occlusions.
- Component coordination: Making the different components of the robot (camera, motors, electronic board) work together required special attention.

To meet these challenges, we combined computer vision techniques (object detection, motion tracking) with control algorithms to drive the robot. We also conducted numerous field experiments to refine our models and account for the specificities of the real environment.

Computer vision: The YOLOv8 model was chosen for its ability to detect objects of interest (ball, goal, robots) in real time in the images captured by the onboard camera. The model was trained on a custom dataset to optimize detection performance in the specific context of the football field.

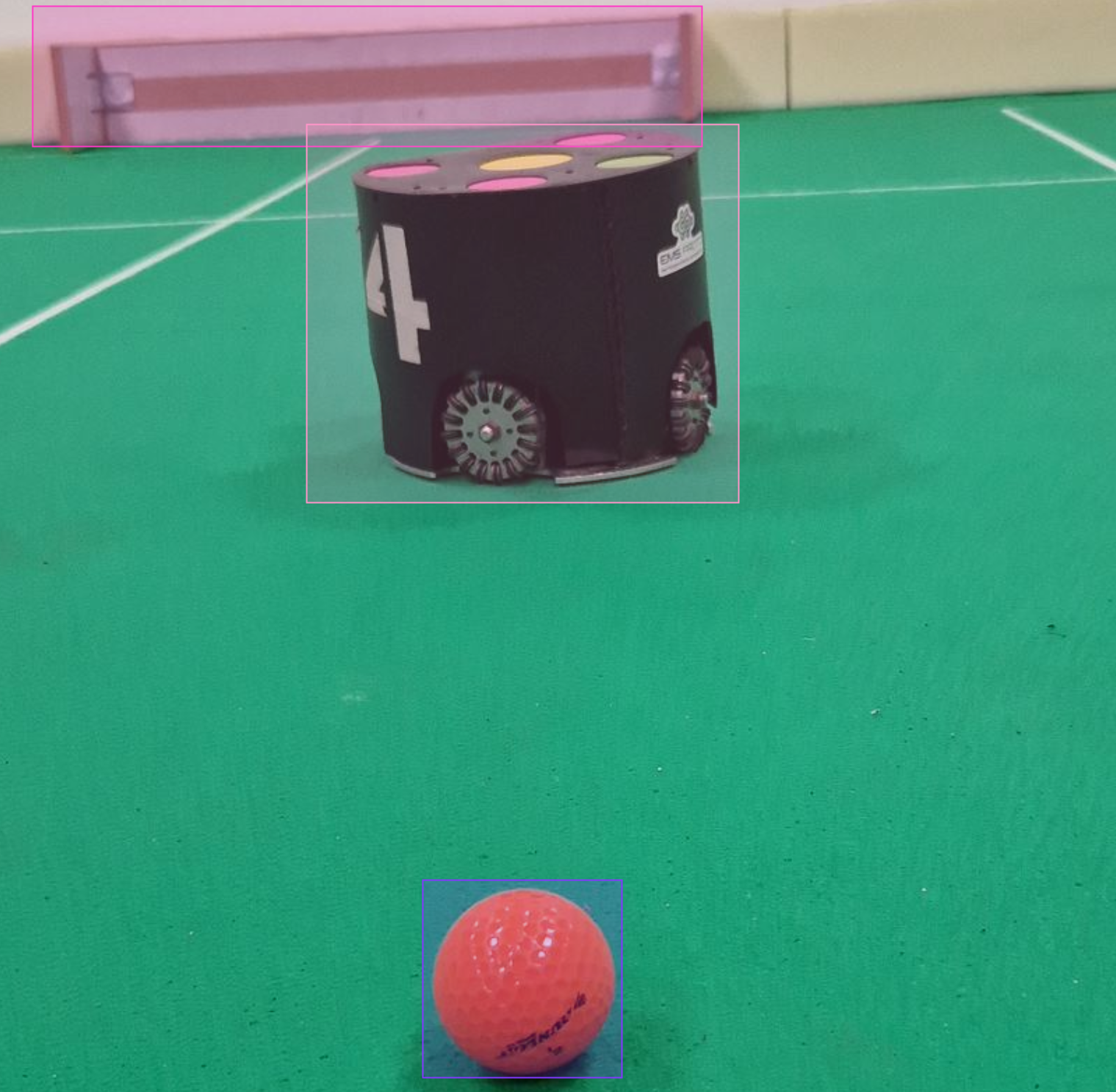

Image annotation: The objects of interest were marked in each training image for the YOLOv8 model using the MAKESENSE.AI tool. An example of an annotated image is shown below.

Annotation example

Image processing: The images captured by the camera are preprocessed to improve detection quality (noise reduction, contrast enhancement).

Robot control: A custom API was developed to interact with the RSK robot. This API allows controlling the robot's movements, managing speed and direction.

Programming language: Python was chosen for its simplicity and large community. Libraries such as OpenCV, NumPy, and PyTorch were used for image processing, numerical calculations, and deep learning.

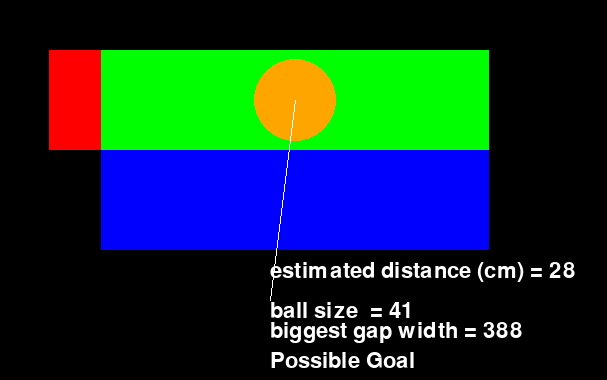

Finally, with the image processing in place and our model trained, we also created interfaces to test our goal-shooting algorithms, using tools like Pygame.

Goal decision represented with Pygame

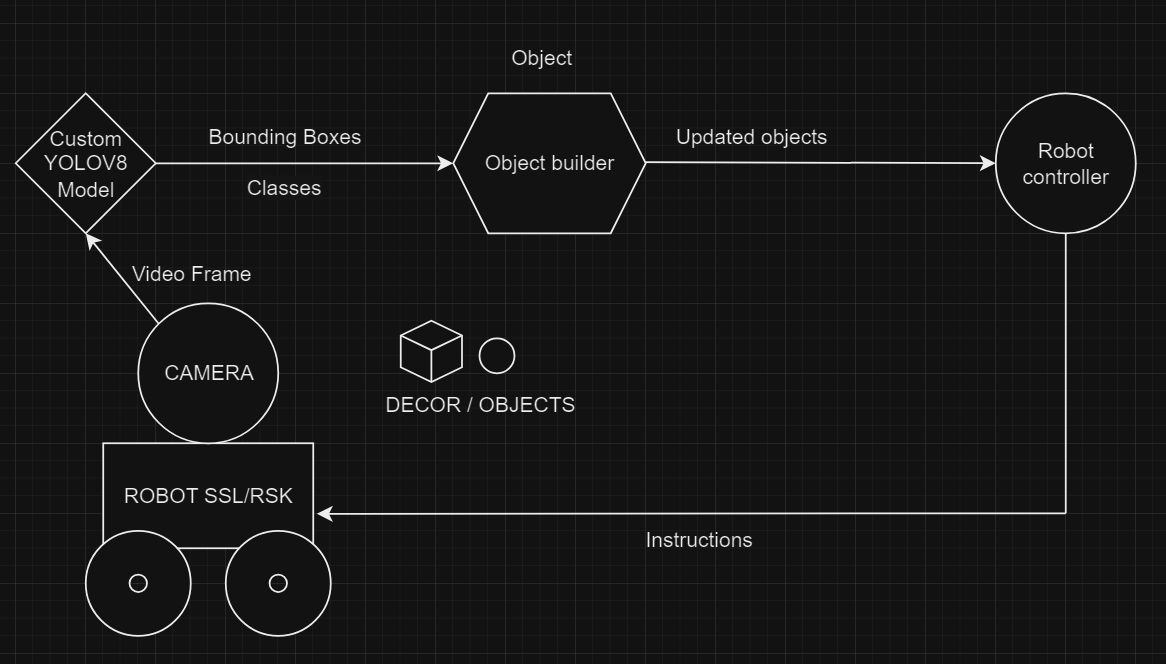
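The goal decision relies on overlaying bounding boxes to find the open areas of the goal. The sketch below is one possible way to express that idea, not the project's actual code: it reduces each box to its horizontal extent, subtracts the obstacle boxes (goalkeeper, other robots) from the goal box, and aims at the middle of the widest remaining gap. The names `Box`, `free_intervals`, and `pick_target_x` are hypothetical, and the pixel values are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Box:
    """Horizontal extent of a bounding box in image pixels (hypothetical type)."""
    x_min: float
    x_max: float


def free_intervals(goal: Box, obstacles: List[Box]) -> List[Tuple[float, float]]:
    """Return the horizontal spans of the goal not covered by any obstacle."""
    # Clip each obstacle to the goal and drop the ones that do not overlap it.
    clipped = sorted(
        (max(o.x_min, goal.x_min), min(o.x_max, goal.x_max))
        for o in obstacles
        if o.x_max > goal.x_min and o.x_min < goal.x_max
    )
    gaps: List[Tuple[float, float]] = []
    cursor = goal.x_min  # left edge of the area not yet examined
    for start, end in clipped:
        if start > cursor:
            gaps.append((cursor, start))
        cursor = max(cursor, end)
    if cursor < goal.x_max:
        gaps.append((cursor, goal.x_max))
    return gaps


def pick_target_x(goal: Box, obstacles: List[Box]) -> Optional[float]:
    """Aim at the center of the widest free gap, or None if the goal is covered."""
    gaps = free_intervals(goal, obstacles)
    if not gaps:
        return None
    start, end = max(gaps, key=lambda g: g[1] - g[0])
    return (start + end) / 2.0


if __name__ == "__main__":
    goal = Box(100, 300)
    keeper = Box(180, 240)
    print(free_intervals(goal, [keeper]))
    print(pick_target_x(goal, [keeper]))
```

Working on the horizontal axis only keeps the computation cheap, which matters on a board like the Jetson Nano. A fuller version could also weigh the gap width against the shooting distance.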

At the heart of the system is a main execution loop that manages the flow of information and triggers actions based on camera data. Image processing is handled by the YOLOv8 model, while robot control is achieved using a custom API. Unit tests were implemented to ensure code quality.

Flowchart representing the information loop
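As a rough illustration of such an information loop, the sketch below runs a perception, decision, action cycle on a sequence of frames. Everything in it is an assumption made for the example: the `Detection` type, the `FakeRobot` class (a stand-in for the custom robot API, whose real interface is not shown here), the action names, and the thresholds. The detector is passed in as a plain function so that the YOLOv8 call can be replaced by a stub during testing.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Detection:
    """One detected object (hypothetical structure)."""
    label: str       # e.g. "ball", "goal", "robot"
    x_center: float  # horizontal center of the bounding box, in pixels
    width: float     # bounding box width, in pixels


class FakeRobot:
    """Stand-in for the robot control API: it only records the commands."""

    def __init__(self) -> None:
        self.commands: List[str] = []

    def send(self, command: str) -> None:
        self.commands.append(command)


def decide(detections: List[Detection], image_width: float,
           tolerance: float = 20.0, close_width: float = 120.0) -> str:
    """Choose one action from the detections of a single frame."""
    ball = next((d for d in detections if d.label == "ball"), None)
    if ball is None:
        return "search"  # no ball in view: keep looking for it
    offset = ball.x_center - image_width / 2.0
    if abs(offset) > tolerance:
        return "turn_right" if offset > 0 else "turn_left"
    # The ball is centered: a wide box means it is close enough to kick.
    return "kick" if ball.width >= close_width else "forward"


def run_loop(frames: Iterable, detect: Callable[[object], List[Detection]],
             robot: FakeRobot, image_width: float) -> None:
    """Main loop: detect objects in each frame, decide, then act."""
    for frame in frames:
        robot.send(decide(detect(frame), image_width))


if __name__ == "__main__":
    # In this demo each "frame" is already a list of detections.
    frames = [[], [Detection("ball", 500, 30)], [Detection("ball", 320, 150)]]
    robot = FakeRobot()
    run_loop(frames, detect=lambda frame: frame, robot=robot, image_width=640)
    print(robot.commands)
```

Keeping `decide` as a pure function of the detections makes it straightforward to cover with unit tests, independently of the camera and the motors.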

Tests were conducted at different levels:

The results obtained show that the developed system is capable of performing the assigned tasks with satisfactory reliability. However, improvements are still possible, particularly in terms of object detection accuracy and robot reaction speed.

To achieve these objectives, several methods were used. First, for object detection, we used YOLOv8 to detect the ball, the goals, and the goalkeeper. We then needed trajectory calculations to enable our robot to shoot: angles and distances were derived from the position and size of the bounding boxes. The robot's movements were driven through the custom API. Finally, to determine which areas of the goal the robot should shoot at, we once again used the bounding boxes, this time overlaying them to identify the available areas of the goal.

Some objectives were abandoned during the project, including passing the ball from one robot to another. Because some group members were not active, we prioritized the goal-shooting objectives, which seemed more important to present.
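To illustrate how an angle and a distance can be derived from the position and size of a bounding box, here is a minimal sketch based on a pinhole camera model. This is an assumed approach, not necessarily the one used in the project. The function name `estimate_bearing_and_distance`, the 90° horizontal field of view, and the 43 mm ball diameter are illustrative values to replace with the real camera and ball parameters.

```python
import math
from typing import Tuple

# (x_min, y_min, x_max, y_max) in pixels
BBox = Tuple[float, float, float, float]


def estimate_bearing_and_distance(box: BBox, image_width: float,
                                  hfov_deg: float,
                                  real_width_m: float) -> Tuple[float, float]:
    """Estimate the bearing (degrees) and distance (meters) of an object.

    Assumes an ideal pinhole camera without lens distortion and an object
    whose real width is known (for example the ball diameter).
    """
    x_min, _, x_max, _ = box
    pixel_width = x_max - x_min
    if pixel_width <= 0:
        raise ValueError("bounding box must have a positive width")

    # Focal length in pixels, from the horizontal field of view.
    focal_px = (image_width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)

    # Bearing: angle between the optical axis and the box center.
    x_center = (x_min + x_max) / 2.0
    bearing_deg = math.degrees(math.atan((x_center - image_width / 2.0) / focal_px))

    # Distance: similar triangles, real width / distance = pixel width / focal.
    distance_m = real_width_m * focal_px / pixel_width
    return bearing_deg, distance_m


if __name__ == "__main__":
    ball_box = (304.0, 200.0, 336.0, 232.0)  # illustrative detection
    print(estimate_bearing_and_distance(ball_box, image_width=640,
                                        hfov_deg=90.0, real_width_m=0.043))
```

The distance estimate degrades when the object is partially occluded or cut off by the image border, since the box is then narrower than the object really appears.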

Demonstration video of the project